1: Getting around User-Agent blocking

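The User-Agent header is the browser's identity string, and websites use it to determine what kind of client is making the request. To avoid being blocked on it, prepare a large list of User-Agent strings in advance and pick one at random for every request, so that no single value is reused often enough to stand out.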

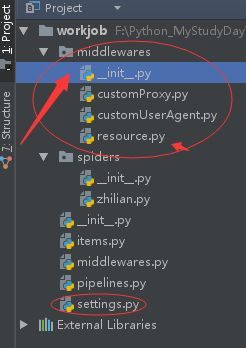

Create a resource file, resource.py, and a middleware file, customUserAgent.py.

Contents of resource.py:

    # -*- coding: utf-8 -*-

    # Pool of User-Agent strings to pick from at random.
    USER_AGENT_LIST = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/43.0.2357.132 Safari/537.36",
        "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:41.0) Gecko/20100101 Firefox/41.0",
    ]

Contents of customUserAgent.py:

# -*- coding: utf-8 -*-

from scrapy.contrib.downloadermiddleware.useragent import UserAgentMiddleware

from workjob.middlewares.resource import USER_AGENT_LIST

import random

class RandomUserAgent(UserAgentMiddleware):

def process_request(self, request, spider):

ua = random.choice(USER_AGENT_LIST)

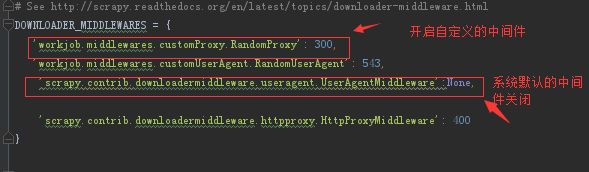

request.headers.setdefault('User-Agent', ua)不要忘记设置setting了。
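The settings entry itself is not spelled out in the text. A minimal sketch of what it might look like, assuming the middleware file sits at `workjob/middlewares/customUserAgent.py` (inferred from the import path used above) and using 400 as an arbitrary priority that is not taken from the original:

```python
# settings.py (sketch; the module path and the priority 400 are assumptions)
DOWNLOADER_MIDDLEWARES = {
    # Disable Scrapy's built-in User-Agent middleware so it cannot fill in
    # the header before the random one does.
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": None,
    # Enable the RandomUserAgent middleware from customUserAgent.py.
    "workjob.middlewares.customUserAgent.RandomUserAgent": 400,
}
```

Because `RandomUserAgent` uses `setdefault`, it only fills in the header when the request does not already carry one, which is why the built-in middleware is switched off here rather than left to run alongside it.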

2: Getting around IP blocking

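When one IP address hits the same site repeatedly in a short period, the administrator may let a small number of requests slide, but a large number is clearly a crawler. The most convenient workaround is to go through proxies. I had already written a crawler that scrapes proxy-listing sites, checks which proxies actually work, and stores the valid ones in a database (scraping proxy sites is not covered here; there are plenty of tutorials on Baidu). Prepare a pool of working proxies and switch to a different one for every request.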
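The validity check mentioned above is not shown in the post. One possible way to do it with only the Python standard library is sketched below; `proxy_works`, `filter_working`, the test URL, and the timeout are all illustrative names and values, not the author's code, and the database step is left out.

```python
import http.client
import urllib.request


def proxy_works(proxy, test_url="http://example.com", timeout=5):
    """Return True if test_url can be fetched through the 'host:port' proxy."""
    handler = urllib.request.ProxyHandler({"http": "http://%s" % proxy})
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Connection failures, timeouts, HTTP errors, and malformed
        # responses all count as "proxy not usable".
        return False


def filter_working(proxies, check=proxy_works):
    """Keep only the proxies for which check(proxy) returns True."""
    return [proxy for proxy in proxies if check(proxy)]
```

Passing the check as a parameter keeps the filtering logic testable without network access, for example `filter_working(candidates, check=my_check)` with a custom `my_check` function.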

Continue editing resource.py:

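    # Pool of proxies in 'host:port' form.
    PROXIES = [
        '1.85.220.195:8118',
        '60.255.186.169:8888',
        '118.187.58.34:53281',
        '116.224.191.141:8118',
        '120.27.5.62:9090',
        '119.132.250.156:53281',
        '139.129.166.68:3128',
    ]

Just append this below the existing code. I have only listed a few proxies here; you can add many more.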

Create customProxy.py with the following contents:

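    # -*- coding: utf-8 -*-

    import random

    from workjob.middlewares.resource import PROXIES


    class RandomProxy(object):
        """Downloader middleware that routes each request through a random proxy."""

        def process_request(self, request, spider):
            proxy = random.choice(PROXIES)
            # Scrapy reads the proxy to use from request.meta['proxy'].
            request.meta['proxy'] = 'http://%s' % proxy

As before, don't forget to enable this middleware in settings.py.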
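The corresponding settings entry is not shown either. A minimal sketch, assuming the file sits at `workjob/middlewares/customProxy.py` (inferred from the import path above) and using 410 as an arbitrary priority that is not taken from the original:

```python
# settings.py (sketch; the module path and the priority 410 are assumptions)
DOWNLOADER_MIDDLEWARES = {
    # Enable the RandomProxy middleware from customProxy.py.
    # Merge this entry into the existing dict rather than replacing it.
    "workjob.middlewares.customProxy.RandomProxy": 410,
}
```

As far as I know, Scrapy's built-in `HttpProxyMiddleware` runs at priority 750 by default, so any lower number should let `RandomProxy` set `request.meta['proxy']` before the built-in proxy handling sees the request.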

When reposting, please credit: 稻香的博客 » Scrapy random User-Agent and random IP access